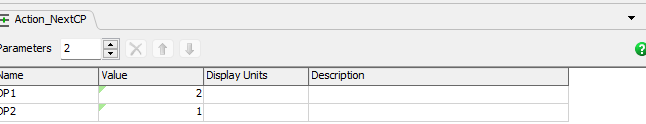

I am using a MultiDiscrete action space, and the PPO algorithm runs correctly with it.

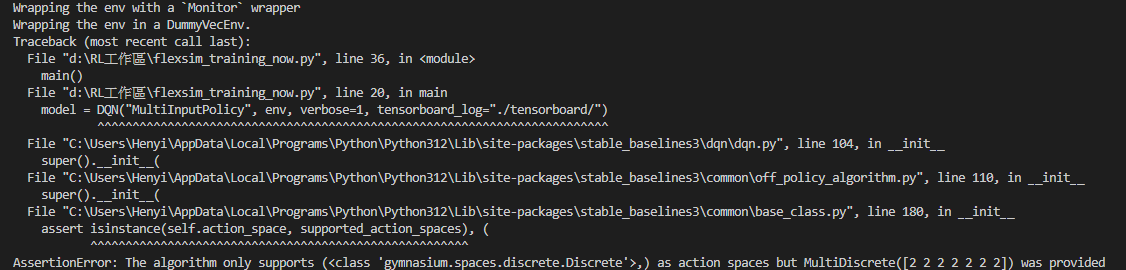

However, when I switched to DQN, it seems that DQN does not support MultiDiscrete action spaces.

Is it possible to convert a MultiDiscrete action space into a Discrete one?
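
To make the question concrete, here is a minimal sketch of the kind of conversion I have in mind: each combination of sub-actions gets one flat index, so the Discrete space would have as many actions as the product of the MultiDiscrete sizes. The sizes `[3, 2, 4]` and the helper names `discrete_to_multidiscrete` and `multidiscrete_to_discrete` are made up for illustration and are not part of any library; only the NumPy calls are real.

```python
import numpy as np

# Hypothetical sub-action sizes, standing in for MultiDiscrete([3, 2, 4]).
nvec = (3, 2, 4)

# The flattened Discrete space needs one action per combination.
n_actions = int(np.prod(nvec))


def discrete_to_multidiscrete(action, nvec):
    """Decode a flat index in [0, prod(nvec)) into one index per sub-action."""
    dims = tuple(int(n) for n in nvec)
    return np.array(np.unravel_index(int(action), dims))


def multidiscrete_to_discrete(multi_action, nvec):
    """Encode one index per sub-action into a single flat index."""
    dims = tuple(int(n) for n in nvec)
    indices = tuple(int(a) for a in multi_action)
    return int(np.ravel_multi_index(indices, dims))


# Round trip: every flat action decodes and re-encodes to itself.
for flat in range(n_actions):
    decoded = discrete_to_multidiscrete(flat, nvec)
    assert multidiscrete_to_discrete(decoded, nvec) == flat
```

Presumably the decoding step could live in the `action` method of a `gym.ActionWrapper` subclass, so that DQN sees a Discrete space while the environment still receives the MultiDiscrete action. I am not sure whether this is the recommended approach, and the number of flat actions grows quickly as the sub-action sizes increase.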

Thank you in advance for your advice or any alternative approaches.